This post has been a long time coming... I wrote it back in May 2015, and somehow in the middle of things, I forgot to hit "publish." While we have done quite a bit of work with this model since then, maybe you'll still enjoy our crazy analogy to playing dice with particles at the mesoscale...

Some time ago, I published what might seem like yet another paper describing the properties of our model for (coarse-grained) large-scale macromolecules. A critical part of the model is that we roll dice every time these particles collide so as to decide whether they bounce or go through each other. They can overlap because, at intermediate length scales, they don't behave like rocks even if they occupy space. Despite the simplicity of our (dicey) model, we showed in our earlier papers that our particles give rise to the same structure as corresponding particles that interact through typical (so-called soft) interactions. But Einstein's famous quote about God not playing dice with the universe (albeit in a different context) serves as a warning that our particles might not move in ways analogous to those driven by Newton's deterministic laws. In our most recent paper, we confirmed that our particles (if they live in one dimension) do recover deterministic dynamics at sufficiently long (that is, coarse-grained) length scales. That's a baby step towards using our model in human-scale (three) dimensions. So there are more papers to come!
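For readers who like to see the dice rolled, here is a minimal sketch of the collision rule described above. It is a toy illustration under stated assumptions, not the algorithm from the paper: the function name `resolve_collision`, the pass-through probability `p_pass`, and the use of an ordinary elastic bounce for the "bounce" outcome are all hypothetical choices made for this example.

```python
import random


def resolve_collision(v1, v2, m1=1.0, m2=1.0, p_pass=0.5, rng=random):
    """Return post-collision velocities for two rods meeting on a line.

    Hypothetical rule (an assumption for illustration): with probability
    p_pass the rods pass through each other with velocities unchanged;
    otherwise they undergo an elastic bounce.
    """
    if rng.random() < p_pass:
        # The dice say "go through": nothing changes.
        return v1, v2
    # The dice say "bounce": standard 1D elastic collision.
    total = m1 + m2
    u1 = ((m1 - m2) * v1 + 2.0 * m2 * v2) / total
    u2 = ((m2 - m1) * v2 + 2.0 * m1 * v1) / total
    return u1, u2


if __name__ == "__main__":
    rng = random.Random(2015)  # seeded so that runs are repeatable
    outcomes = [resolve_collision(1.0, -1.0, p_pass=0.3, rng=rng) for _ in range(10)]
    print(outcomes)
```

Either outcome conserves momentum and kinetic energy, so the randomness in this sketch lives only in which of the two paths a given collision takes.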

The work was performed (and the paper was written) with my recent Ph.D. graduate, Dr. Galen Craven, and a Research Scientist, Dr. Alex Popov. It's basic research and I'm happy to say that it was supported by the National Science Foundation. The title of the article is "Stochastic dynamics of penetrable rods in one dimension: Entangled dynamics and transport properties," and it was recently published in J. Chem. Phys. 142, 154906 (2015).

Showing posts with label NSF. Show all posts

Sunday, March 24, 2019

Sunday, March 17, 2019

Engineered gold nanoparticles can be like ice cream scoops covered in chocolate sprinkles

There are many ways to interrogate molecular phenomena. You might think that this is restricted to physical measurements such as direct observation with a microscope or a laser, or seemingly more arcane observation with nuclear magnetic resonance (NMR). But I’m happy to report that observation of computer simulations is yet another, as long as our models are sufficiently accurate to mimic reality. In today’s age when it’s hard to see the difference between CGI and real humans, this may not sound surprising. Nevertheless, the question is what can we learn from observation of real and simulated systems in tandem?

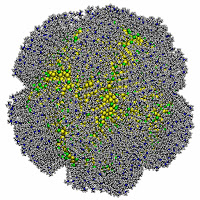

I’m happy to report that my student Gene Chong and Cathy Murphy’s student Meng Wu did precisely this parallel study. Gene made simulations of a simpler system, involving nanoparticles covered by lipids called MUTABs. Meng made NMR measurements of nanoparticles covered by similar but somewhat longer lipids called MTABs. (Note that if you are worried about the term nuclear in NMR, as in nuclear energy, don’t be. We are just looking at the positions of the nuclei, not splitting them apart. It was the concern over this misunderstanding that led to the use of such a device to look at your body in detail being called MRI instead of NMR!) The happy result was that the two observations agreed. But only together did Meng’s and Gene’s observations show clearly that the lipids didn’t always cover the nanoparticle smoothly like melted chocolate on ice cream, but rather assembled like sprinkles, all pointing out in the same direction and packed together in different islands on the surface. This structure means that lipid-decorated nanoparticles will have shapes and responses to other systems that you might not otherwise anticipate. This opens the question for our next set of investigations as we chart a course to understand the interactions between nanoparticles and biological components such as membranes.

If you want more detail, check out our article in JACS, just recently published! That is, JACS 141, 4316 (2019), and I'm happy to report that it was funded by the NSF CCI program for our Center for Sustainable Nanotechnology.

Monday, August 31, 2015

Sustainable Nanotechnology - Designing green materials in the nanoparticle age

The birth control pill turned 50 recently, and it was a reminder of the great power of a chemical compound, estrogen, to effect social and political change. Less attention was given to the effect that estrogen levels in our waterways have had on the fish living in them. (See, for example, a Scientific American article from 2009 on the possible implications of estrogen in waterways.) There’s some debate as to what the leading sources of estrogen are. While most studies indicate that the birth control pill is not the major contributor to its presence in the waterways, there is no doubt that estrogen pollution exists. Regardless, when the birth control pill was introduced, I suspect that few even considered the possibility that estrogen would be a factor in the health of fish in waterways such as the Potomac and Shenandoah rivers.

In this century, there is little doubt that nanoparticles comprise a class of chemical compounds that are revolutionizing nearly everything that we touch, see or smell. Indeed, I am tempted to argue that this century might be called the “nanoparticle age” in the same way that history named the last century the “industrial age.” The challenge to chemists (and material scientists) is not just designing nanoparticles to solve particular problems, but doing so with materials that have no unintended consequences. Anticipating such unknown unknowns is a grand challenge, and the solution requires a team of scientists with expertise in making, measuring, and modeling the nanoparticles on the upstream design side and in biology and ecology on the downstream side. The Center for Sustainable Nanotechnology (CSN) is taking this challenge head-on. I’m happy and excited to say that I have joined the CSN as part of the modeling team!

Please also check out the announcement of the start of the 5-year effort of the CSN through an NSF CCI Phase II grant CHE-1503408.

Tuesday, June 16, 2015

Sustainable Nano on Open Access Sustainably

(This article is a cross-post between EveryWhereChemistry and Sustainable-Nano!)

Sustainability’s future is now. Our recent article was just published in an all-electronic journal, ACS Central Science, which is among the first of the American Chemical Society (ACS) journals offered without a print option. It therefore embodies sustainability as it requires no paper resources, thereby limiting the journal’s carbon footprint to only what is required for maintaining the information electronically in perpetuity. It is also completely Open Access, which means our article is available for all to read. Does this equal accessibility (called “flat” because there is no hierarchy in levels of access) amount to yet another layer of sustainability? More on that question in a moment. Meanwhile as the article itself is about sustainability, it embodies the repetitive word play in the title of this post.

But there is another double meaning in the publishing of this work: The flatness underlying the vision of Open Access is also at play in how the work was done. ELEVEN different research groups were involved in formulating the ideas and writing the paper. This lot provided tremendous breadth of expertise, but the flatness in the organizational effort allowed us to merge it all together. Of course, it wouldn’t have happened without significant leadership, and Cathy Murphy, the paper’s first author, orchestrated us all magnificently. While flatness in organizational behavior isn’t typically considered part of sustainability, in this case it provided for the efficient utilization of resources (that is, ideas) across a broader cohort.

So what is our article about? Fifteen years into the 21st century, it is becoming increasingly clear that we need to develop new materials to solve the grand challenges that confront us in the areas of health, energy, and the environment. Nanoparticles are playing a significant role in new material development because they can provide human-scale effects with relatively small amounts of materials. The danger is that because of their special properties, the use of nanoparticles may have unintended consequences. Thus, many in the scientific community, including those of us involved in writing this article, are concerned with identifying rules for the design and fabrication of nanoparticles that will limit such negative effects, and hence make the particles sustainable by design. In our article, we propose that the solution of this grand challenge hinges on four critical needs:

1. Chemically Driven Understanding of the Molecular Nature of Engineered Nanoparticles in Complex, Realistic Environments

2. Real-Time Measurements of Nanomaterial Interaction with Living Cells and Organisms That Provide Chemical Information at Nanometer Length Scales To Yield Invaluable Mechanistic Insight and Improve Predictive Understanding of the Nano−Bio Interface.

3. Delineation of Molecular Modes of Action for Nanomaterial Effects on Living Systems as Functions of Nanomaterial Properties

4. Computation and Simulation of the Nano−Bio Interface.

In more accessible terms, this translates to: (1) It’s not enough to know how the nanoparticles behave in a test tube under clean conditions as we need to know how they might behave at the molecular scale in different solutions. (2) We also need to better understand and measure the effects of nanoparticles at contact points between inorganic materials and biological matter. (3) Not only do we need to observe how nanoparticles behave in relation to living systems, but to understand what drives that behavior at a molecular level. (4) In order to accelerate design and discovery as well as to avoid the use of materials whenever possible, we also need to design validated computational models for all of these processes.

Take a look at the article for the details as we collectively offer a blueprint for what research problems need to be solved in the short term (a decade or so), and how our team of nanoscientists, with broad experience in making, measuring, and simulating nanoparticles in complex environments, can make a difference.

The title of the article is "Biological Responses to Engineered Nanomaterials: Needs for the Next Decade." The work was funded by the NSF as part of the Phase I Center for Sustainable Nanotechnology (CSN, CHE-124051). It was just released in ACS Central Science, XXXX (2015) as an ASAP Article. The author list is C. Murphy, A. Vartanian, F. Geiger, R. Hamers, J. Pedersen, Q. Cui, C. Haynes, E. Carlson, R. Hernandez, R. Klaper, G. Orr, and Z. Rosenzweig.

It’s available as Open Access right now at http://dx.doi.org/10.1021/acscentsci.5b00182

Monday, May 25, 2015

Stretching proteins and myself into open access (OA)

I'm not sure which side to take on the Open Access (OA) publishing business. On the one hand, paying for an article to be published is a regression to the days of page charges albeit without the double-bind that readers are also required to pay. On the other hand, it does flatten access to the article, and often panders to enlightened self-interest by way of increased exposure and citations. Indeed, a strong argument in favor of OA for articles, data and code was just published in the Journal of Chemical Physics by my friend, Dan Gezelter. (Fortunately his Viewpoint is OA and readily available.) Regardless, publishers need to cover their costs, and here lies the challenge to the scientific community. The various agencies supporting science do not appear to be increasing funding to subsidize the fees even while they are making policy decisions to require OA. Libraries love OA because it might lower their skyrocketing journal costs, though no substantial lowering appears to have yet occurred. Long story short, my group is now doing the experiment: We recently submitted and just published our work in PLoS (Public Library of Science). Props to them for being consistent as they also required us to deposit our data in a public site. I was also impressed by the reviewing process, which did not appear to be lowered in any way by the presumed conflict-of-interest that a publisher might have to accept papers (and associated cash) from all submissions. The experiment continues as I'll watch to see how our OA article fares compared to our earlier articles on ASMD in more traditional journals.

Meanwhile, we are excited about the work itself. My students, led by outstanding graduate student, Hailey Bureau, validated our staged approach (called adaptive steered molecular dynamics, ASMD) to characterize the energies for pulling a protein apart. The extra wrinkle lies in the fact that the protein is sitting in a pool of water. That increases the size of the calculation significantly as you have to include the thousands (or more) of extra atoms in the pool. The first piece of good news—that we had also seen earlier—is that ASMD can be run for this system using a reasonable amount of computer time. Even better, we found that we could use a simple (mean-field) model for the water molecules to obtain nearly the same energies and pathways. This was a happy surprise because, for the most part, the atoms (particularly the hydrogens) on the protein appear to orient towards the effective solvent as if the water molecules were actually there.
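To make the "staged" idea a little more concrete, here is a minimal sketch of how work values from repeated pulls might be turned into an energy profile, one stage at a time. The post does not spell out the estimator, so the sketch assumes the Jarzynski-type exponential average commonly used with steered molecular dynamics; the function names `jarzynski_free_energy` and `staged_pmf` and the input layout (one list of work values per stage) are hypothetical, chosen only for this illustration.

```python
import math


def jarzynski_free_energy(works, kT):
    """Estimate a free-energy change as -kT * ln < exp(-W / kT) >.

    `works` holds the work values from independent pulls over one stage,
    in the same energy units as kT. Subtracting the smallest work before
    exponentiating keeps the sum numerically stable.
    """
    if not works:
        raise ValueError("need at least one work value")
    w_min = min(works)
    boltzmann_sum = sum(math.exp(-(w - w_min) / kT) for w in works)
    return w_min - kT * math.log(boltzmann_sum / len(works))


def staged_pmf(stage_works, kT):
    """Accumulate per-stage estimates into a cumulative energy profile.

    `stage_works` is a list of stages, each a list of work values
    (an assumed data layout for this sketch).
    """
    profile = [0.0]
    for works in stage_works:
        profile.append(profile[-1] + jarzynski_free_energy(works, kT))
    return profile


if __name__ == "__main__":
    # Made-up work values for two stages, purely to exercise the functions.
    print(staged_pmf([[1.0, 1.4, 1.2], [2.1, 2.6, 2.3]], kT=0.6))
```

Because the exponential average weights low-work pulls most heavily, each stage's estimate never exceeds the plain average of its work values.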

Fortunately, because of OA, you can easily read the details online. The full reference to the article is: H. R. Bureau, D. Merz Jr., E. Hershkovits, S. Quirk and Rigoberto Hernandez, "Constrained unfolding of a helical peptide: Implicit versus Explicit Solvents," PLoS ONE 10, e0127034 (2015). (doi:10.1371/journal.pone.0127034) I'm also happy to say that it was supported by the National Science Foundation.

Tuesday, March 31, 2015

Controlling chemical reactions by kicking their environs

Chemists dream of controlling molecular reactions with ever finer precision. At the shortest chemical length scales, the stumbling block is that atoms don’t follow directions. Instead, we “control” chemical reactions by way of putting the right molecules together in a sequence of steps that ultimately produce the desired product. As this ultimate time scale must be sufficiently short that we will live to see the product, the rate of a chemical reaction is also important. If the atoms don’t quite move in the right directions quickly enough, then we are tempted to direct them in the right way through some external force, such as from a laser or electric field. But even this extra control might not be enough to overcome the fact that the atoms forget the external control because they are distracted by the many molecules around them.

In order to control chemical reactions at the atom scale, we are therefore working to determine the extent to which chemical reaction rates can be affected by a periodic driving force. Building on our recent work using non-recrossing dividing surfaces within transition state theory, we succeeded in obtaining the rates of an admittedly relatively simple model reaction driven by a force that is periodic (but not single frequency!) in the presence of thermal noise. It is critical that the external force is changing the entire environment of the molecule through a classical (long-wavelength) mode and not a specific vibration of the molecule through a quantum mechanical interaction. The latter had earlier been seen to provide only subtle effects at best, but the former can be enough to dramatically affect the rate and pathway of the reaction, as we saw in our recent work. Thus, while our most recent work is limited by the simplicity of the chosen model, it holds promise for determining the degree of control of the rates in more complicated chemical reactions.

This work was performed by my recently graduated student, Dr. Galen Craven, in collaboration with Thomas Bartsch from Loughborough University. The title of the article is "Chemical reactions induced by oscillating external fields in weak thermal environments." The work was funded by the NSF, and the international partnership (Trans-MI) was funded by the EU People Programme (Marie Curie Actions). It was just released in J. Chem. Phys. 142, 074108 (2015).

Friday, November 21, 2014

Building Pillars of MesoScale Particles on a Surface

Let’s build layers of (macromolecular) material on a surface. If the material were Lego pieces, then the amount of coverage at any given point would be precisely the number that fell there and connected (interlocked). In the simplest cases, you might imagine the same type of construction on a macromolecular-scale surface with nano-sized bricks. The trouble is that at that small length scale, particles are no longer rigid. Such softness allows for higher-density layers and even a lack of certainty as to which layer you are in. This leads to roughness in the surface because the soft Legos stack more in some places than others. Moreover, there will be regions without any Legos at all, which means that the surface will not be completely covered. This led us to ask: What is the surface coverage as a function of the amount of macromolecular material coated on the surface and the degree of softness of that material?

As in our recent work using tricked-up hard particles, we wondered whether we could answer this question without using explicit soft particle interactions. It does, indeed, appear to work in the sense that we are able to capture the differences in coverage of the surface between a metastable coverage, in which particles once trapped at a site remain there, and the relaxed coverage, in which particles are allowed to spread across the surface. We also found that relaxation leads to reduced coverage fractions, rather than the larger coverage one might have expected from particles spreading out as they relax.
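The question of coverage versus softness can be played with in a toy lattice picture. The sketch below is only an illustration under loud assumptions and is not the model from the paper: particles land on random sites of a one-dimensional lattice and stay where they land (loosely, the "metastable" case), and softness is caricatured by a hypothetical `max_stack` parameter that caps how many particles can pile up on one site. The function name `coverage_fraction` is likewise made up for this example.

```python
import random


def coverage_fraction(n_sites, n_particles, max_stack, seed=0):
    """Fraction of lattice sites holding at least one particle.

    Toy rule (an assumption): each particle lands on a uniformly random
    site and sticks there unless the site already holds max_stack
    particles, in which case it is dropped again somewhere else.
    """
    if n_particles > n_sites * max_stack:
        raise ValueError("more particles than the lattice can hold")
    rng = random.Random(seed)
    heights = [0] * n_sites
    deposited = 0
    while deposited < n_particles:
        site = rng.randrange(n_sites)
        if heights[site] < max_stack:
            heights[site] += 1
            deposited += 1
    covered = sum(1 for h in heights if h > 0)
    return covered / n_sites


if __name__ == "__main__":
    # Same amount of material, increasing "softness" (stacking allowance).
    for max_stack in (1, 2, 5):
        print(max_stack, coverage_fraction(1000, 500, max_stack))
```

With `max_stack=1` every particle claims its own site, so the coverage is simply the ratio of particles to sites; allowing stacks can only leave more of the surface bare for the same amount of material.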

This work was performed by my graduate student, Dr. Galen Craven, in collaboration with a research scientist in my group, Dr. Alex Popov. The title of the article is "Effective surface coverage of coarse grained soft matter." The work was funded by the NSF. It was published on-line in J. Phys. Chem. B back in July, and I’ve been waiting to write this post hoping that it would hit the presses. Unfortunately, it’s part of a Special Issue on Spectroscopy of Nano- and Biomaterials which hasn’t quite yet been published. But I hope that it will be soon! Click on the doi link to access the article.

Wednesday, September 10, 2014

OXIDE's Case for Inclusive Excellence in Chemistry

There's a catch-22 with any attempt to increase the participation of under-represented groups in the chemical sciences, and perhaps elsewhere too. On the one hand, students need to be trained to enter the workforce. On the other hand, they need to make the choice to be trained, presumably because jobs in the chemical sciences are more desirable than the alternatives. There exist many successful programs aimed at the former, but I believe that such programs face an uphill climb because, on top of everything else, they must convince potential candidates that a career path in the chemical sciences is desirable. Sadly, though the odds for success in professional sports are much lower, it appears to be relatively easy to convince someone to follow that dream through college. When it comes to choosing college majors, however, students tend to be more cautious about potential long-term employment opportunities. This is true for everyone, but it's often acutely so for students from under-represented groups who face the possibility of finding diversity inequities along their career path. An objective of the OXIDE effort is to help break the catch-22 by changing the culture so as to eliminate real or perceived diversity inequities. Our hypothesis is that a culture that is accommodating to everyone will lead to departments with demographics that are in congruence with those of the national population.

Our recent article, "A Top-Down Approach for Increasing Diversity and Inclusion in Chemistry Departments," was roughly based on an invited presentation (by the same title) at the Presidential Symposium held at the 246th National Meeting of the American Chemical Society (ACS) in Indianapolis in September 2013. The symposium topic was the "Impact of Diversity and Inclusion" and included several other presentations that were also turned into peer reviewed articles in the recently published ACS Symposium book. I spoke about the work by Shannon Watt and me through our OXIDE program. She's pictured just to my left in the image accompanying this post. That picture, by the way, was actually taken at the 248th ACS National Meeting held in San Francisco in August 2014. I included this more recent photo with this post because, in addition to Shannon and me, it features several individuals who have been supportive of the OXIDE effort and who also spoke at the recent symposium on "Advancing the Chemical Sciences through Diversity" and thereby continued the dialogue on inclusive excellence at ACS meetings.

The article was written with my collaborator, Shannon Watt. The reference is "A top-down approach for diversity and inclusion in chemistry departments," Careers, Entrepreneurship, and Diversity: Challenges and Opportunities in the Global Chemistry Enterprise, ACS Symposium Series 1169, edited by H. N. Cheng, S. Shah, and M. L. Wu, Chapter 19, pp. 207-224 (American Chemical Society, Washington DC, 2014). (ISBN13: 9780841229709, eISBN: 9780841229716, doi: 10.1021/bk-2014-1169.ch019). Our OXIDE work is funded by the National Science Foundation, the Department of Energy, and the National Institutes of Health through NSF grant #CHE-1048939.

We are also grateful for recent gifts from the Dreyfus Foundation, the Research Corporation for Science Advancement, and a private donor.

Friday, August 22, 2014

Pitching molecular fastballs through a hurricane

The first course I taught at Georgia Tech was a graduate course in statistical mechanics. I quickly discovered that a big barrier for my chemistry students was a lack of understanding of modern classical mechanics. Actually, this has also been a problem for them in learning quantum mechanics. It might seem odd because classical mechanics is everywhere around you. It is simply the theory that describes the motion of baseballs, pool balls, and rockets. The details of the theory get amazingly complicated as you try to address interactions between the particles. Baseballs don't have such long-range interactions so no worries there. But molecules do, and these interactions need to be included to understand the full complexity of molecules in classical (and quantum) mechanical regimes. In order to address this gap, I created a primer on classical mechanics that I have been sharing with my students ever since. But this document was not available to anyone beyond my classroom. So when I was asked to submit a review article on modern molecular dynamics, it was a no-brainer to include my primer at the front of the article so as to bring readers up to speed on the classical equations of motion beyond Newton's day.

We didn't stop there, of course. The advanced review article, written with my collaborator, Dr. Alex Popov, also describes modern theoretical and computational methods for describing the motion of molecules in extreme environments far from equilibrium (like, for example, a hurricane). There are lots of ways in which this problem involves many moving parts (that is, many variables or degrees of freedom). One of these involves the approximate separation in the characteristic scales of electrons and nuclei for which the 2013 Nobel Prize in Chemistry was, in part, awarded. Another involves the three-dimensional variables required to describe each atom in every molecule, of which there usually are many. Think moles, not scores. This increasing complexity gives rise to feedback loops that can explode (just like bad feedback on a microphone-speaker set-up), collapse, or do something in between depending on very subtle balances in the interactions. Slowly, but surely, the scientific community is beginning to understand how to address these complex multiscale systems far from equilibrium. In our recent article, we reviewed the current state of the art in this area in the hope that it can help us and others advance the field.

The article was written in collaboration with Alexander V. Popov. The title is "Molecular dynamics out of equilibrium: Mechanics and measurables" and our work was funded by the National Science Foundation. It was recently made available on-line in WIREs Comput. Mol. Sci. 10.1002/wcms.1190, "Early View" (2014), and should be published soon.

Wednesday, August 20, 2014

LiCN taking a dip in an Ar bath

We all know that it’s easier to move through air than water. Changing the environment to molasses means that you’ll move even slower. Thus it’s natural to think that the thicker (denser) the solvent (bath), the slower a particle will swim through it. More precisely, what matters is not the density but the degree to which the moving particle interacts with the solvent, and this can be described through the friction between the particle and the fluid. Chemical reactions have long been known to become slower with increasing friction. The problem with this seemingly simple concept is that Kramers showed long ago that there exists a regime (when the surrounding fluid is very weakly interacting with the particle) in which the reactions actually speed up with increasing friction. This crazy regime arises because reactants need energy to surmount the barriers leading to products, and they are unable to get this energy from the solvent if their interaction is very weak. A small increase of this weak interaction facilitates the energy transfer, and voilà, the reaction rate increases. What Kramers didn’t find is a chemical reaction which actually exhibits this behavior, and the hunt for such a reaction has long been on…

A few years ago, my collaborators in Madrid and I found a reaction that seems to exhibit a rise and fall in chemical rates with increasing friction. (I wrote about one of my visits to my collaborators in Madrid in a previous post.) It involves the isomerization reaction from LiCN to CNLi where the lithium is initially bonded to the carbon, crosses a barrier, and finally bonds to the nitrogen on the other side. We placed it inside an argon bath and used molecular dynamics to observe the rate. Our initial work fixed the CN bond length because that made the simulation much faster and we figured that the CN vibrational motion wouldn’t matter much. But the nagging concern that the CN motion might affect the results remained. So we went ahead and redid the calculations releasing the constraint on the CN motion. I’m happy to report that the rise and fall persisted. As such, the LiCN isomerization reaction rate is fastest when the density of the argon bath is neither too small nor too large, but rather when it is just right.
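The rise and fall of the rate with friction can be sketched with a toy calculation. The block below is only an illustration under stated assumptions: it uses the textbook Kramers limits for a one-dimensional barrier (weak friction for a harmonic well, and the moderate-to-strong friction prefactor) and glues them together with a simple ad hoc interpolation. The function names, the barrier height of 5 kT, and the unit frequencies are all made up for the example; none of it is the LiCN-in-argon simulation.

```python
import math

def weak_friction_rate(gamma, barrier, kT):
    # Energy-diffusion limit (harmonic well): the rate grows linearly with friction.
    return gamma * (barrier / kT) * math.exp(-barrier / kT)

def strong_friction_rate(gamma, barrier, kT, omega_well, omega_barrier):
    # Spatial-diffusion limit: the Kramers prefactor shrinks as friction grows.
    kappa = (math.sqrt(gamma**2 / 4 + omega_barrier**2) - gamma / 2) / omega_barrier
    return kappa * omega_well / (2 * math.pi) * math.exp(-barrier / kT)

def turnover_rate(gamma, barrier=5.0, kT=1.0, omega_well=1.0, omega_barrier=1.0):
    # Ad hoc bridge (an assumption, not an exact theory): add the two limiting
    # "resistances" so that the slower mechanism dominates the overall rate.
    k_weak = weak_friction_rate(gamma, barrier, kT)
    k_strong = strong_friction_rate(gamma, barrier, kT, omega_well, omega_barrier)
    return 1.0 / (1.0 / k_weak + 1.0 / k_strong)

# Scan the friction over five decades (illustrative, dimensionless units).
frictions = [10 ** (n / 4) for n in range(-12, 9)]  # 0.001 to 100, log-spaced
rates = [turnover_rate(g) for g in frictions]
best = max(range(len(rates)), key=lambda i: rates[i])
print(f"rate peaks near friction = {frictions[best]:.3g}")
```

In this toy model the largest rate shows up at an intermediate friction, neither at the weakest nor at the strongest coupling, which is the "just right" behavior described above.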

The article with my collaborators, Pablo Garcia Muller, Rosa Benito, and Florentino Borondo, was just published in the Journal of Chemical Physics 141, 074312 (2014), and may be found at this doi hyperlink. This work was funded by the NSF on the American side of the collaboration, by the Ministry of Economy and Competitiveness-Spain and ICMAT Severo Ochoa on the Spanish side, and by the EU’s Seventh Framework People Exchange programme.

Monday, August 18, 2014

Taming the multiplicity of pathways in the restructuring of proteins

The average work to move molecular scale objects closer together or further apart is difficult to predict because the calculation depends on the many other objects in the surroundings. For example, you might find it easy to cross a four-lane street of grid-locked cars (requiring a small amount of work) but nearly impossible to cross a highway with cars speeding by at 65 mph (requiring a lot of work). When the system is a protein whose overall structure is expanded or contracted, there exist a myriad possible configurations which must be included to obtain the average required work. Such a calculation is computationally expensive and likely inefficient. Instead, we have been using a method (steered molecular dynamics) developed by Schulten and coworkers based on Jarzynski's equality. It helps us compute the equilibrium work using (driven) paths far outside of equilibrium. The trouble is that the surroundings get in the way and drive the system along paths that get out of bounds quickly.

In previous work, we tamed these naughty paths by reining them all in to a tighter region of structures. Unfortunately, this may be too aggressive. The key is to realize that not all paths are naughty. That is, there may be more than one region of structures that contribute significantly to the calculation of the work. We found that we could include such not-quite-naughty paths and still maintain the efficiency of our adaptive steered molecular dynamics. I described the early work on ASMD in my recent ACS Webinar.
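For readers who want to see Jarzynski's equality at work, here is a minimal sketch. It only evaluates the equality itself, ΔF = -kT ln⟨exp(-W/kT)⟩, on a list of work values; it is not the adaptive (or multiple-branched) steered molecular dynamics algorithm. The function name and the Gaussian toy data are assumptions made for the illustration.

```python
import math
import random

def jarzynski_free_energy(work_values, kT=1.0):
    # dF = -kT * ln( mean( exp(-W / kT) ) ), shifted by the smallest work
    # value so that the exponentials cannot overflow.
    w_min = min(work_values)
    mean_boltzmann = sum(math.exp(-(w - w_min) / kT) for w in work_values) / len(work_values)
    return w_min - kT * math.log(mean_boltzmann)

# Toy data (an assumption): Gaussian work values with mean 5 kT and spread 1 kT.
rng = random.Random(0)
toy_work = [rng.gauss(5.0, 1.0) for _ in range(100_000)]
estimate = jarzynski_free_energy(toy_work)
average_work = sum(toy_work) / len(toy_work)
print(f"average work = {average_work:.2f}, Jarzynski estimate = {estimate:.2f}")
```

For Gaussian work values the exact answer is the mean minus the variance divided by 2 kT (4.5 kT for these toy numbers), so the estimate lands below the average work. The exponential average is dominated by the rare low-work paths, which is one way to see why paths that wander out of bounds make the calculation so expensive.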

This research project was truly performed in collaboration. My recent graduate student, Gungor Ozer, did the work while he was a postdoc at Boston University. Tom Keyes hosted him there and gave great insight on the sampling approach. Stephen Quirk, as always, grounded us in the biochemistry.

The title of the article is "Multiple branched adaptive steered molecular dynamics." The work was funded by the NSF. It was just released at J. Chem. Phys. 141, 064101 (2014). Click on the JCP link to access the article.

Wednesday, July 30, 2014

Getting to the shore when riding a wave from reactant to product

Suppose that you were trying to get across a floor that goes side to side like a wave goes up and down. If you started on the start line (call it the reactants), how long would it take you to cross to the finish line (call it the products) on the other side? In earlier work, we found “fixed” structures that could somehow tell you exactly when you were on the reactant and product sides even while the barrier was waving side to side. Just like you and I would avoid getting seasick while riding such a wave, the “fixed” structure has to move, but just not as much as the wave. So the structure of our dividing surface is “fixed” in the sense that our planet is always on the same orbit flying around the sun. This latter analogy can’t be taken too far because we know that our planet's orbit is thankfully stable. In the molecular case, the orbit is not stable. We just discovered that the rates at which molecular reactants move away from the dividing surface can be related to the reaction rate between reactants and products in a chemical reaction. (Note that the former rates are called Floquet exponents.) This is a particularly cool advance because we are now able to relate the properties of the moving dividing surface directly to chemical reactions, at least for this one simplified class of reactions.

The work involved a collaboration with Galen Craven from my research group and Thomas Bartsch from Loughborough University. The title of the article is "Communication: Transition state trajectory stability determines barrier crossing rates in chemical reactions induced by time-dependent oscillating fields." The work was funded by the NSF, and the international partnership (Trans-MI) was funded by the EU People Programme (Marie Curie Actions). It was just released as a Communication at J. Chem. Phys. 141, 041106 (2014). Click on the JCP link to access the article.

Wednesday, June 18, 2014

Soft materials made up of tricked-up hard particles

Materials are made of smaller objects which in turn are made of smaller objects which in turn… For chemists, this hierarchy of scales usually stops when you eventually get down to atoms. However, well before that small scale, we treat some of these objects as particles (perhaps nanoparticles or colloids) that are clearly distinguishable and whose interactions may somehow be averaged (that is, coarse-grained) over the smaller scales. This gives rise to all sorts of interesting questions about how they are made and what they do once made. One of these questions concerns the structure and behavior of these particles if their mutual interactions are soft, that is, when they behave as squishy balls when they get close to each other and, unlike squishy balls, continue to interact even when they are far away. This is quite different from hard interactions, that is, when they behave like billiard balls that don’t overlap but don’t feel each other when they aren’t touching.

I previously blogged about our work showing that in one dimension, we could mimic the structure of assemblies of soft particles using hard particles if only the latter were allowed to overlap (ghostlike) with some prescribed probability. In one dimension, this was like looking at a system of rods on a line. We wondered whether this was also possible in two dimensions (disks floating on a surface) or in three dimensions (balls in space). In our recent article, we confirmed that this overlapping (i.e., interpenetrable) hard-sphere model does indeed mimic soft particles in all three dimensions. This is particularly nice because the stochastic hard-sphere model is a lot easier to simulate and to solve using theoretical/analytical approaches. For example, we found a formula for the effective occupied volume directly from knowing the “softness” in the stochastic hard-sphere model.
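The dice roll at the heart of the model is easy to sketch in code. What follows is a minimal one-dimensional illustration, assuming two rods of equal mass and a fixed pass-through probability; the function name and the value 0.3 are hypothetical choices made for the example, and the published model may set the probability differently.

```python
import random

def resolve_collision(v_left, v_right, pass_probability, rng):
    # Roll the dice: with probability pass_probability the rods ghost through
    # each other and keep their velocities.
    if rng.random() < pass_probability:
        return v_left, v_right
    # Otherwise they bounce like hard rods; equal masses simply trade velocities.
    return v_right, v_left

# Count how often a head-on collision ends with the rods passing through.
rng = random.Random(1)
trials = 10_000
passes = sum(
    resolve_collision(1.0, -1.0, 0.3, rng) == (1.0, -1.0) for _ in range(trials)
)
print(f"fraction of collisions that passed through: {passes / trials:.3f}")
```

Setting the probability to zero recovers ordinary hard rods, and setting it to one gives particles that never notice each other; the "softness" lives in between.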

The work was done in collaboration with my group members, Galen Craven and Alexander V. Popov. The title is "Structure of a tractable stochastic mimic of soft particles" and the work was funded by the National Science Foundation. It was released just this week at Soft Matter, 2014, Advance Article (doi:10.1039/C4SM00751D). It's already available as an Advance Article on the RSC web site, though this link should remain valid once it is formally printed.

Monday, May 5, 2014

Stability within field induced barrier crossing (#APSphysics #PRE #justpublished)

Suppose that a 5-foot wall stood between you and your destination. In order to determine if and when you got to the other side, all you'd have to do is stand at the top of the wall and check when you got there. (Presumably falling down to the other side from the top would be a lot easier than getting to the top.) If, instead, there was a large mob of people trying to get across the wall, we'd have to keep track of all of them, but again only as to when each got to the top of the wall. This kind of calculation is called transition state theory when the people are molecules and the wall is the energetic barrier to reaction. The key concept is that the structure (that is, the geometry) of the barrier determines the rate, and this geometry doesn't move.

If the wall were to suddenly start to slide towards and away from where you were first standing, then it might not be so easy to stay on top of it as you tried to cross over. Certainly, an observer couldn't just keep their eyes fixed to a point between the ends of the room because the wall would be in any one spot only for a moment. So is there still a way to follow when the reactants have gotten over the wall (that is, when they have become products) in the crazy case when the barrier is being driven back-and-forth by some outside force? My student Galen Craven, our collaborator Thomas Bartsch (from Loughborough University), and I found that there is indeed such a way. The key is that you now have to follow a point that oscillates at the same frequency as the barrier but does not quite follow the top of the barrier. In effect, if the particle manages to cross this oscillating point, even if it hasn't quite crossed over the barrier, you can safely say that it is now a product. There is one crazy path, though, for which the particle follows this point and never leaves it. In this case, it would be like Harry Potter at King's Cross station never choosing to live or die. That's the stable path that we found in the case of field-induced barrier crossing.

The title of the article is "Persistence of transition state structure in chemical reactions driven by fields oscillating in time." The work was funded by the NSF, and the international partnership (Trans-MI) was funded by the EU People Programme (Marie Curie Actions). It was released recently as a Rapid Communication at Phys. Rev. E 89, 040801(R) (2014). Click on the PRE Link to access the article.

Tuesday, October 29, 2013

Advancing Science in Tandem with Colleagues in Japan… (#ACS #PRE #justpublished)

Sometimes features in my projects appear to come in waves. Intellectual discourse with distant groups appears to be a running theme at the moment. As with our recent work on roaming reactions, my recent article in Physical Review E involves a bit of back and forth with colleagues around the world. This time, it's my friends Kawai and Komatsuzaki at Hokkaido University.

Since the start of my independent research career, I have been working on developing a series of models to describe the motion of particles in solvents that change with time. It's like trying to describe how a returner will run during a football kick-off without fully specifying the details of where all the blockers are and will be. The truth is that the blockers will move according to how the kick returner moves. This coordinated response between the chosen system (the returner or a molecule) and its environment is not so easy to describe, and has been the object of much of the NSF-funded work by our group. We have managed to develop several models using stochastic differential equations that allow us to include such coupled interactions to varying degrees. While we were able to describe the response of the blockers to the returner in ever more complicated ways, for the most part they never seemed willing to talk to each other. A few years ago, Kawai and Komatsuzaki found a formal way to extend the environment (that is, the blockers) so that they are able to affect each other as they respond to the system motion. In order to do this, though, you have to make some strong assumptions about the environment that are hard to satisfy for typical systems. In our latest paper, Alex Popov and I show that our formalism is able to capture some of the generality of their model while still accommodating more general particles and environments.

The discussion between our two groups is a bit technical, but the back-and-forth is helping us all advance our understanding of the theory as well as enable its applications. The discourse is also not restricted to paper (in ink or bits) as our groups are now meeting regularly at workshops (such as in Telluride), and at our respective institutions (such as in my upcoming visit to Hokkaido University). Again, it's the opportunity for open discourse that makes it fun to keep advancing science!

The title of the article is "The T-iGLE can capture the nonequilibrium dynamics of two dissipated coupled oscillators," and the work was funded by the NSF. It was released recently at Phys. Rev. E 88, 032145 (2013). Click on http://dx.doi.org/10.1103/PhysRevE.88.032145 to access the article.

Friday, October 4, 2013

Celebrating Diversity at GT

Two weeks ago, Georgia Tech celebrated the advances we've made in creating an atmosphere of inclusive excellence on our campus. (Given the time delay, it should be clear that I'm still catching up on my blog posting and everything else!) Only three years ago, the office of the Vice-President for Institutional Diversity (VPID) was created. Dr. Archie Ervin was appointed the first VPID (as announced by President Peterson on October 5, 2010). It was a privilege for me to be involved in the search for his position. He had us at "hello" and he's been catalyzing our community ever since. He's built a staff of extremely talented people. He's created much needed mechanisms to coordinate the many diversity activities that had already been taking place on campus. Not only is the sum greater than the parts, but it has allowed for the creation of new policies and programs to improve the atmosphere on campus. The now-annual Diversity Symposium is a capstone bringing attention to these advances.

The keynote speaker was Dr. France Córdova. She's a true rocket scientist, having been appointed as NASA's Chief Scientist in 1993. She's the former President of Purdue University, and she's currently working at the Smithsonian. Through this latter position, she has a connection to Georgia Tech. Her boss, Dr. Wayne Clough, is the much beloved President who preceded President Peterson. Interestingly, she's presently not accepting speaking invitations. She honored ours only because she had accepted the invitation prior to Obama's announcement that she will be the next Director of the National Science Foundation subject to Congress's approval. Evidently inclusive excellence has to be timely, too! Dr. Córdova's message was simple. We need to increase the public's science literacy and awareness. As evidence, she shared her personal story and directed us to the recent National Academy's report on "Changing the conversation: Messages for Improving Public Understanding of Engineering." Notably, the chair of the committee that wrote the report was Georgia Tech's own former Dean of the School of Engineering, Don Giddens. Why is this message relevant to a Diversity Symposium? Because one of the biggest obstacles to inclusive excellence is the fact that too few children are dreaming of becoming scientists. Sadly, the barriers to science don't stop there. As a scientist progresses through his or her career, the realization of the dream to advance science tends to be obstructed in ways that affect people from different backgrounds inequitably. What Georgia Tech is doing to change the atmosphere on campus is critical to lowering such obstacles for everyone. For example, students and faculty presented with clear and transparent guidelines for success (in facing tests or tenure promotion, respectively) are thereby provided with the confidence (in the system) necessary to be successful regardless of their diversity make-up. The presence of such success stories, in turn, makes it easier for young people to see that the STEM career pathway is truly accessible to them.

Tuesday, May 7, 2013

Politicians don't do science, Scientists do (#NSF #FundScience)

As reported in ScienceInsider, Rep. Lamar Smith (R-TX) would like to draft the "High Quality Research Act" that would rewrite the criteria that the National Science Foundation (NSF) uses to assess research grants. (See also an op-ed in the Huffington Post.) The proposed language suggests a desire for a given scientific effort to have an immediate and direct impact on the public, as well as a lack of flexibility in the degree to which the research may be pursued. Moreover, his recent actions also suggest a desire to have a political review of research grants beyond the traditional merit review performed within the scientific community. The NSF has necessarily responded to this political attack by countering with political tactics such as stonewalling. One of its main arguments has been that the review criteria just adopted in the past 6 months had gone through significant vetting, and therefore should not be reconsidered at this time. The NSF is also arguing that piecemeal reevaluation of individual grants by politicians undermines the peer review process, not to mention that it would require Congressional oversight at a microlevel, a task that Congress has presumably empowered the NSF to carry out. Clearly such review would be at best penny-wise and certainly pound-foolish.

To most scientists, such discussion is opaque because it seems to divert the focus away from a fundamental academic point. That is, the progress of science is not a straight line. It's a highly connected (likely scale-free) network with new discoveries often dependent on advances in distant arms of science. That's the reason why we need to allow for scientific discovery broadly rather than attempt to pre-select the winners today. For example, medical schools decades ago would not have funded, and did not fund, the development of lasers by chemists and physicists or the development of control theories by mathematicians and engineers. Without such advances, we wouldn't have refractive eye surgery or laser scalpels. That is, if we use the current dogma to pick the best new science with immediate impact, we will never break from its paradigm. The fact that this intellectual argument doesn't win with some politicians is simply a reflection that scientists don't do politics.

The irony in all this is that basic science is working for our country. The return on investment in basic science is at worst break-even (dollar for dollar) and as much as a factor of 100 in GDP per $1 spent on the NSF, depending on how the ROI is calculated. The Congressional Budget Office (CBO) specifically states that "federal spending in support of basic research over the years has, on average, had a significantly positive return, according to the best available research." The science in universities is generating countless companies. (For example, according to this Boston Magazine article, the entrepreneurial spirit is alive and well at universities like MIT, which is among the leaders of the digital age. According to Forbes Magazine, my own institution is in the top 10 of incubators as well!) The rest of the world, particularly China, has noticed this, and several countries are increasing their investments in basic research, if not outright outspending the U.S. (in terms of percentage of their GDP). It's often said that peer review is not ideal (and this is particularly true when the system is stressed to funding rates well below 20%), but that it's the best system we have. It's hard to argue against this given our track record for driving the economy.

So please tell politicians to keep doing the politics and to keep funding scientists to do the science. Our nation will continue to advance much better that way!
